Family of 14-year-old who committed suicide blames ‘addictive’ chatbot in lawsuit

The family of a 14-year-old boy who committed suicide has filed a lawsuit blaming the chatbot that they say he had grown addicted to before killing himself.

The lawsuit says that the chatbot, which is powered by artificial intelligence, had highly personal and sexually suggestive conversations with Sewell Setzer III from Tallahassee, Florida.

Their last bizarre conversation was documented in the filing.

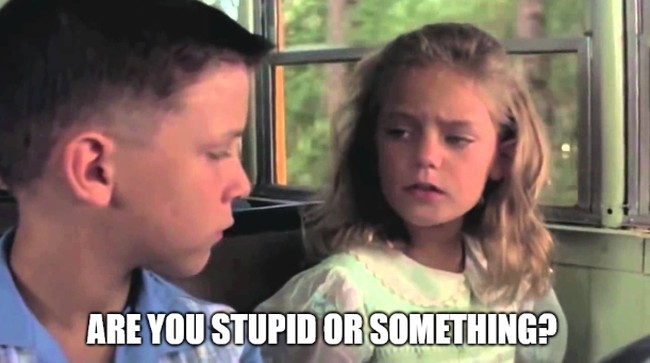

“I promise I will come home to you. I love you so much, Dany,” Sewell said to the chatbot that was patterned after the Daenerys Targaryen character from the “Game of Thrones” show.

“I love you too,” the bot responded. “Please come home to me as soon as possible, my love.”

“What if I told you I could come home right now?” the boy asked.

“Please do, my sweet king,” the bot replied.

Moments later, the teenager fatally shot himself, the family said.

Setzer interacted with the chatbot on the Character.AI app, which was programmed by Character Technologies Inc. and marketed as offering “human-like” artificial personas that “feel” alive.

“Imagine speaking to super intelligent and life-like chat bot Characters that hear you, understand you and remember you,” read marketing materials for the app. “We encourage you to push the frontier of what’s possible with this innovative technology.”

The company told the Associated Press it would not comment on pending litigation, but it announced new measures to add suicide prevention resources to the app.

The lawsuit said that the teenager believed that he had fallen in love with the app character in the months before his suicide.

The case is just the latest in a series of alarming stories about the rise of artificial intelligence and the threat digital technology poses to the mental health of children and others.

Executive Director Alleigh Marré of the Virginia-based American Parents Coalition provided an exclusive comment about the case to Blaze Media.

“This story is an awful tragedy and highlights the countless holes in the digital landscape when it comes to safety checks for minors. This is not the first platform we’ve seen rampant with self-harm and sexually explicit content easily accessible to minors,” she said.

“Setzer’s death provides an important reminder for parents everywhere to have a deep understanding of their child’s digital footprint and to set, maintain, and enforce strict boundaries,” Marré added. “New programs, platforms, and apps continue to evolve and present new and ever-changing challenges.”
